Multimodal learning: Learn from Different Modalities in an Explainable Way

ABSTRACT:

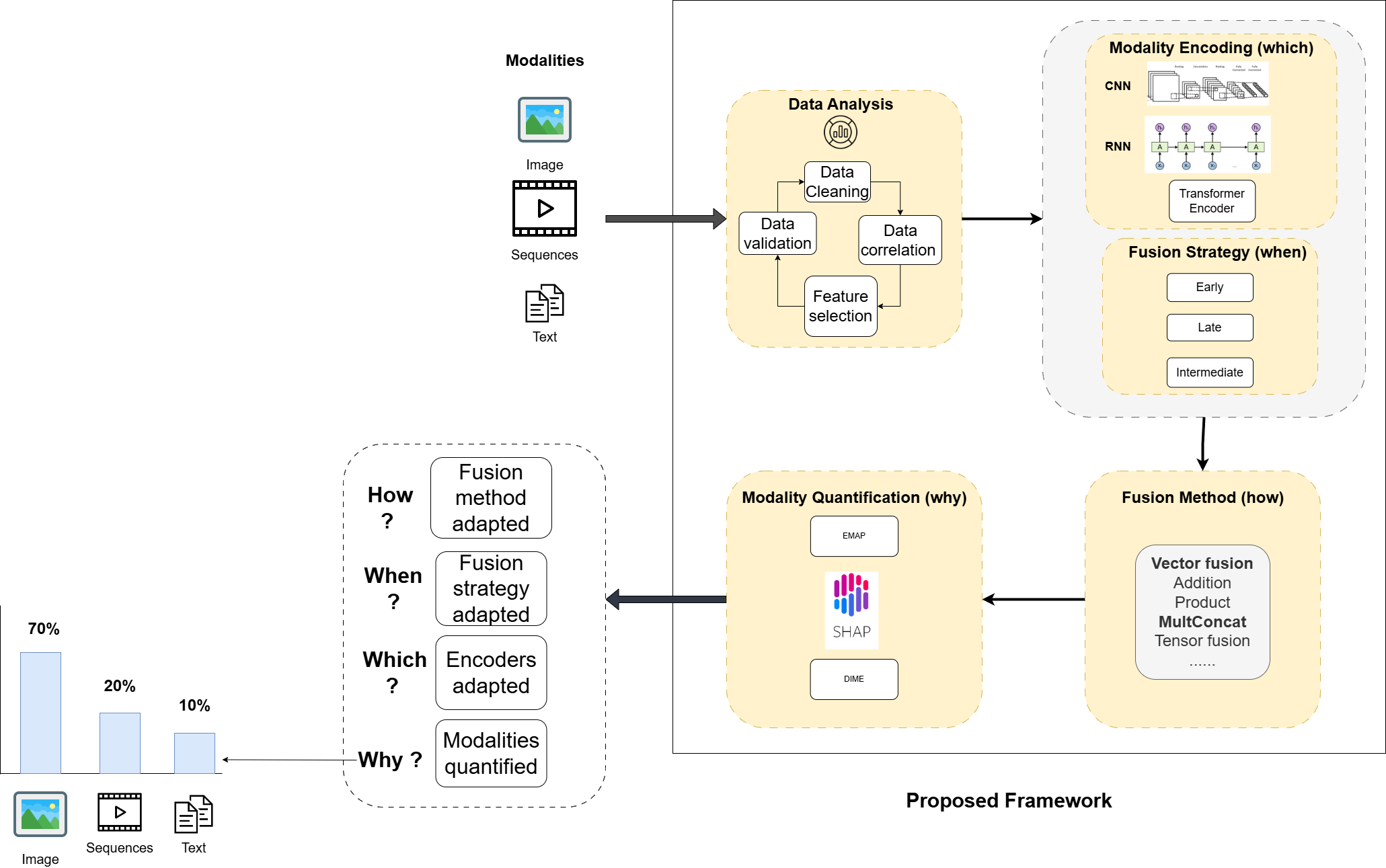

This thesis explores multimodal learning algorithms, inspired by the human brain's ability to combine many sensory inputs. We study three application areas: customs fraud detection; "InfraSecure", a railway construction safety project; and computer-assisted diagnosis of osteoporosis. Our aim is to develop efficient multimodal learning algorithms that outperform existing unimodal solutions and advance explainability in artificial intelligence. Our research highlights the importance of robust fusion methods and effective modality encoders, and identifies key challenges in the field, including the integration of heterogeneous data sources and the need for model transparency. The study concludes with the implementation of our proposed fusion method for customs classification prediction, demonstrating the practical applicability of multimodal learning. Finally, we identify open problems that require further research.

KEYWORDS: Multimodal fusion, Representation learning, Deep learning, XAI

Xavier Siebert

Integrating Machine Learning and Dynamic Digital Follow-up for Enhanced Prediction of Postoperative Complications in Bariatric Surgery

Obesity Surgery, 1-8, 2025

Multimodal Approach for Harmonized System Code Prediction

arXiv preprint arXiv:2406.04349, 2024

FuDensityNet: Fusion-Based Density-Enhanced Network for Occlusion Handling

Proceedings Copyright 632, 639, 2024

Comparison Analysis of Multimodal Fusion for Dangerous Action Recognition in Railway Construction Sites

Electronics 13 (12), 2294, 2024

A review and comparative study of explainable deep learning models applied on action recognition in real time

Electronics 12 (9), 2027, 2023

Similarity versus Supervision: Best Approaches for HS Code Prediction

ESANN, 2023
