🚀 Deep Learning Enthusiast - Explainable AI Advocate - Aspiring MLOps Expert

Passionate about pushing the boundaries of Deep Learning, Explainability, and MLOps at the intersection of research and real-world applications.

🔍 PhD in Explainable Deep Learning | My research explored making AI models more transparent and reliable.

💡 Future Vision | Beyond research, I am diving into MLOps, bridging the gap between cutting-edge AI models and scalable, production-ready solutions. My goal is to build robust, explainable, and efficient AI systems that drive real impact.

Towards Reliable Explanations of Deep Neural Networks – Explainable Artificial Intelligence for Vision and Vision-Language Models

ABSTRACT:

Today, we are fortunate to live in an unprecedented era. Day by day, humanity is developing a technology that surpasses its own capabilities: artificial intelligence (AI), which is transforming the world at an extraordinary pace and challenging our ability to regulate and comprehend it. This rapid progress has been largely fueled by deep neural networks (DNNs), whose increasingly complex architectures have significantly improved performance and precision. Additionally, the rise of transformers and their various adaptations, such as Vision Transformers (ViT), multimodal models like Vision-Language Transformers (VL), and large language models (LLMs), represents a new frontier of complexity. However, these advances have come at the cost of reduced interpretability, raising critical concerns about transparency and trust. Amidst this rapid evolution, ensuring the transparency and reliability of AI systems has become a pressing priority, particularly in high-stakes applications. This thesis addresses this challenge through the lens of explainable AI (XAI), a field dedicated to elucidating the decision-making processes of AI systems to ensure that they are interpretable and trustworthy.

The central aim of this work is to develop more reliable explanations for neural networks, and to guide the field towards them, with a particular focus on XAI for vision tasks. In doing so, this research seeks to lay the foundation for building more robust and trustworthy AI systems. This thesis is structured around three major contributions:

1. Bias Detection and Mitigation: The thesis investigates how biases in convolutional neural network (CNN) models can be identified using XAI methods, and then explores strategies to mitigate these biases. A case study on two datasets (lung X-rays and biased colored digits) illustrates how representation bias impacts model understanding and offers pathways for improvement.

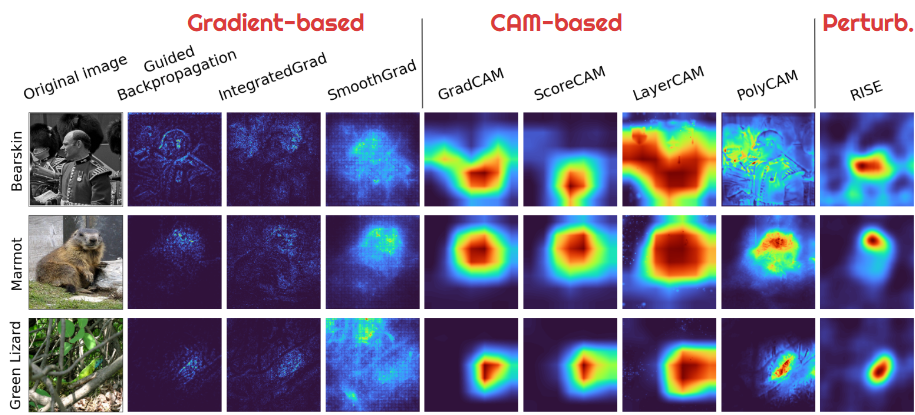

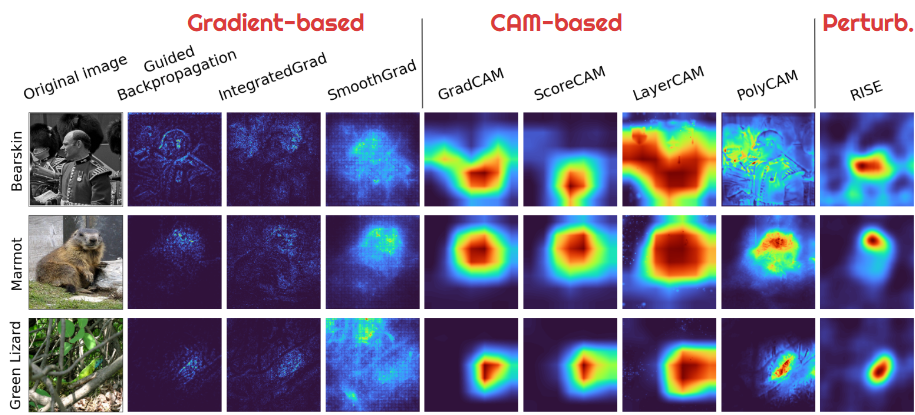

2. Evaluation of Explainability Methods: A comprehensive framework is proposed for selecting and evaluating XAI methods using explainability metrics. This framework is applied to convolutional and transformer-based neural networks, providing insights into their interpretability and correlation, and exposing limitations in current evaluation practices.

3. Advancing Explainability for Novel Architectures: A novel explainability method is introduced for Vision Transformers that is more reliable than its perturbation-based counterparts, along with an adaptable extension for multimodal models handling diverse data types such as images, text, and audio signals. The method consistently ranks at the top across a range of state-of-the-art evaluation metrics.

By addressing critical challenges in bias mitigation, method evaluation, and architectural adaptability, this work aims to ensure that AI technologies can be safely and confidently integrated into society, fostering trust in their potential.

KEYWORDS: Explainable Artificial Intelligence, XAI, Computer Vision, Deep Learning, Explainability, Interpretability

An Efficient Explainability of Deep Models on Medical Images

Algorithms 18 (4), 210, 2025

Multimodal Approach for Harmonized System Code Prediction

arXiv preprint arXiv:2406.04349, 2024

A review and comparative study of explainable deep learning models applied on action recognition in real time

Electronics 12 (9), 2027, 2023

An experimental investigation into the evaluation of explainability methods

arXiv preprint arXiv:2305.16361, 2023

Explainability and evaluation of vision transformers: An in-depth experimental study

Electronics 13 (1), 175, 2023

Explaining through Transformer Input Sampling

Proceedings of the IEEE/CVF International Conference on Computer Vision, 806-815, 2023

An Experimental Investigation into the Evaluation of Explainability Methods for Computer Vision

Communications in Computer and Information Science, 2023

An experimental investigation into the evaluation of explainability methods for computer vision

Joint European Conference on Machine Learning and Knowledge Discovery in …, 2023

Similarity versus Supervision: Best Approaches for HS Code Prediction

ESANN, 2023

Explainable deep learning for covid-19 detection using chest X-ray and CT-scan images

Healthcare informatics for fighting COVID-19 and future epidemics, 311-336, 2022

Explainable deep learning for covid-19 detection using chest X-ray and CT-scan images

Healthcare informatics for fighting COVID-19 and future epidemics, 311-336, 2021
